Numerical methods for solving a nonlinear equation: The bisection method

The bisection method

The bisection method

The bisection method, also called binary search (bisection is Latin for division in two), is a special case of a method in which the search area for a solution of a non-linear equation is systematically reduced in steps.

We start with a continuous function \(f(x)\) on the closed interval \([a,b]\) with \(f(a)\cdot f(b)<0\).

In other words, \(f(a)\) and \(f(b)\) have different signs and continuity means that you can draw the graph of \(f\) on \([a,b]\) without having to take the pen off the paper. Then the mean value theorem informs us that at least one zero of \(f\) can be found on the open interval \((a,b)\).

All we have to do is systematically reduce the interval in which we search for a zero. In the bisection method, this is done—nomen est omen—by halving repeatedly subintervals of \([a,b]\) and determining in each step which interval contains at least one zero.

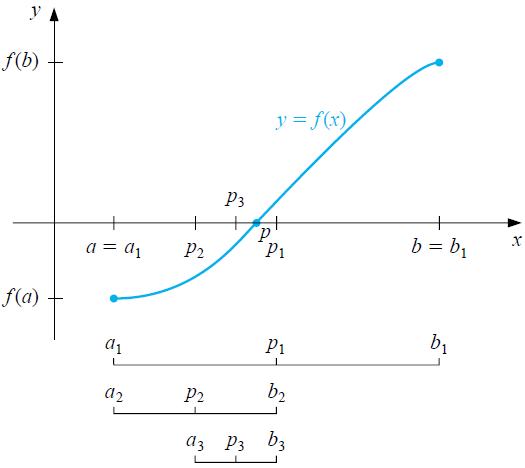

At the beginning, the interval is equal to \([a,b]\) and you first investigate whether the number \(p_1=\tfrac{a+b}{2}\) is a zero of the function \(f\). If this is the case you are done because a zero has been found; if not, split the interval into left and right subintervals, \([a,p_1]\) and \([p_1,b]\), respectively. At what interval the search for a zero continues depends on the sign of \(f(p_1)\). If this sign is opposite to that of \(f(a)\) choose the left interval, otherwise continue with the right interval. You repeat this halving process until you have reached a zero or half the length of the interval is smaller than a predetermined value. In the latter case, you choose the middle of the last found interval as an answer to an approximation of the zero of the function. The figure below illustrates the first iterations in the bisection method to find a zero\(p\) of a function \(f\).

So what you get is a sequence of intervals \[[a_1,b_1], [a_2,b_2], [a_3,b_3], \ldots\] and a sequence of approximations to a zero \[p_1, p_2, p_3, \ldots \] with \(a_1,a_2,a_3,\ldots\) an ascending sequence of numbers starting at \(a_1=a\), and \(b_1,b_2,b_3,\ldots\) a descending sequence of numbers starting at \(b_1=b\) such that \[|b_1-a_1|, |b_2-a_2|, |b_3-a_3|, \ldots\] is a strictly decreasing sequence of nonnegative real numbers. According to the above rule, we stop as soon as (coincidentally) a zero is found ( \(f(p_n)=0\) for a certain \(n\) ), or after \(n\) steps if \[\left|\frac{b_n-a_n}{2}\right|<\epsilon\] for a preselected tolerance \(\epsilon\). In other words, we stop in the bisection method when the truncation error is less than the desired accuracy.

Interactive version of the bisection method In the interactive diagram below you can observe the first 10 iteration steps in the bisection method by clicking the Next button. A restart can done via the Reset icon or by entering (again) the function or the border points.

There are, of course, other criteria for stopping to come up with.

For example, you can look at the relative truncation error \[\left|\frac{b_n-a_n}{a_n+b_n}\right|<\epsilon\] or pre-set the number of iterations \(n\) to the smallest natural number such that \[\frac{b-a}{2^n}< \epsilon\tiny.\] In the latter case you know for sure that the truncation error is less than \(\epsilon\), but if you are lucky the result will be more accurate. In any case, it is always a good idea to limit the number of iterations in a numerical algorithm: this prevents endless iteration loops.

The number of iterations required to find an answer of a certain accuracy is a measure of the method's efficiency. The speed at which the series of approximations \(p_1, p_2, \ldots\) gets closer to the zero, i.e. converges to the zero, is called the convergence speed of the method. You investigate the convergence speed by checking how many iterations are needed to find the approximation within the specified tolerance at different, ever-decreasing tolerances. Usually this is done by systematically reducing the tolerance according to a sequence \(10^{-1}, 10^{-2}, 10^{-3},\ldots \) so that more and more decimal places become correct. The convergence is linear if the relationship between the number of iterations and the number of correctly calculated decimal places is linear. This is the case in the halving method and, moreover, the truncation error halves with every iteration.

Example of the bisection method The polynomial function \(f(x)=x^3+2x-1\) has a zero on the interval \([0,1]\) because \(f(0)=-1\) and \(f(1)=2\). The bisection method successively gives the intervals \([a_n,b_n]\) and approximations \(p_n\) from the table below.

\[\begin{array}{lllll} \\ \hline {n} & a_n & b_n & p_n & f(p_n) \\ \hline

1 & 0.0 & 1.0 & 0.5 & \phantom{-}0.125000 \\

2 & 0.0 & 0.5 & 0.25 & -0.484375 \\

3 & 0.25 & 0.5 & 0.375 & -0.197266 \\

4 & 0.375 & 0.5 & 0.4375 & -0.041260 \\

5 & 0.4375 & 0.5 & 0.46875 & \phantom{-}0.040497 \\

6 & 0.4375 & 0.46875 & 0.453125 & -0.000713 \\

7 & 0.453125 & 0.46875 & 0.460938 & \phantom{-}0.019807 \\

8 & 0.453125 & 0.460938 & 0.457031 & \phantom{-}0.009526 \\

9 & 0.453125 & 0.457031 & 0.455078 & \phantom{-}0.004401 \\

10 & 0.453125 & 0.455078 & 0.454102 & \phantom{-}0.001843\end{array}\] The exact zero within the interval is \[\frac{1}{6}\,\sqrt[3]{108+12\sqrt {177}}-4{\frac {1}{\sqrt[3]{108+12\sqrt {177}}}}\] and an approximation in 10 decimal places is \(0.4533976515\). The relative rounding error of the found answer is then approximately \(0.00155\) and so the found answer is correct in at least 3 significant digits. Note that the earlier approximation \(p_6\) is closer to the actual zero.

It is tempting to look at the sequence \(p_1, p_2, p_3 \ldots\) of the middles of the consecutive intervals and use one of the stopping criteria below: \[\begin{aligned} |p_n-p_{n-1}| &< \epsilon \\ \\ |f(p_n)| &< \epsilon \\ \\ \left|\frac{p_n-p_{n-1}}{p_n}\right| &< \epsilon\end{aligned}\] But each of these criteria has its problems, as we will see in exercises. For example, it is possible that a sequence \(p_1, p_2, \ldots\) has values that get closer and closer to each other without the sequence itself coming close to a fixed value. Also, small function values are no guarantee at all that you are close to a zero on the function's domain.