Calculus: What are derivatives and why should we care?

Univariate Derivatives

Motivation In this theory page, we introduce a visualization for computing derivatives of functions \(f:\mathbb{R}\to\mathbb{R}\). It will not only form the basis for computing higher-dimensional derivatives, but will also get you in the habit of thinking graphically about functions. This will be especially useful when doing deep learning later.

Let us start nice and easy with basic functions over the reals as you have encountered them before, i.e. functions \(f: \mathbb{R} \to \mathbb{R}\). Though this initially may look superfluous, we will introduce a visual way of representing these functions. This new approach will make it easier to consider multivariate functions and is commonplace in machine learning.

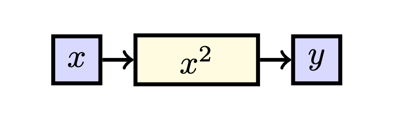

Consider the function \(f\) such that \(f: x \mapsto x^2\), i.e. the functions that squares its input. Again, our output is given by \(y = f(x) = x^2\). We can visualize this function as follows:

The blue squares represent values and the yellow rectangles represent ways to determine a value. The most important insight you should take away is that the sensitivity of \(y\) to \(x\) is given by the sum of influences of all the paths from \(x\) to \(y\). In this case, there is only one path, that is through the function \(x^2\). Using basic differentiation techniques, we hence observe that: \[\frac{\dd y}{\dd x} = \frac{\dd x^2}{\dd x} = 2x\text.\]
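As a quick sanity check, the analytic result can be compared against a numerical estimate. The sketch below uses a central finite difference; the helper name `numerical_derivative` and the step size `h` are illustrative assumptions, not something defined on this page.

```python
def f(x):
    """The squaring function y = x^2."""
    return x ** 2


def numerical_derivative(func, x, h=1e-6):
    """Estimate d func / d x at x with a central finite difference."""
    return (func(x + h) - func(x - h)) / (2 * h)


x = 3.0
analytic = 2 * x                       # dy/dx = 2x from the formula above
numeric = numerical_derivative(f, x)   # numerical estimate at the same point
print(analytic, numeric)
```

The two numbers should agree up to a small rounding error, which is a handy way to catch mistakes in hand-derived derivatives.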

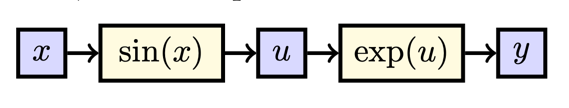

A slightly more spicy example if the function \(f: \mathbb{R} \to \mathbb{R}\) such that \(f: x \mapsto \exp (\sin (x))\). If we make a diagram of this function as above, we can represent it as follows:

Note that we had to introduce a new variable \(u := \sin (x)\) that represents the intermediate value found after applying the sine function to \(x\). When finding the derivative of \(y\) with respect to \(x\), we again consider all the paths from \(x\) to \(y\). Again, there is only one path, now going through our intermediate value \(u\). In this case, the effect of \(x\) on \(y\) is equal to the effect of \(x\) on \(u\) times the effect of \(u\) on \(y\), i.e. \[\frac{\dd y}{\dd x} = \frac{\dd y}{\dd u} \cdot\frac{\dd u}{\dd x}\text.\] You may have encountered this separation of derivatives before as the chain rule. These derivatives are quite simple, giving us \[\frac{\dd y}{\dd x} = \frac{\dd y}{\dd u}\cdot \frac{\dd u}{\dd x} = \exp(u) \cdot \cos (x) = \exp\bigl(\sin (x)\bigr)\cdot \cos (x)\text,\] where we substituted \(u = \sin (x)\) in the last step. In short, we sum over all the paths from \(x\) to \(y\), and along each path we multiply the intermediate effects.
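The path-based view translates almost line by line into code. The following is a minimal sketch, assuming plain Python with the standard `math` module; the function name `forward_and_derivative` is a hypothetical helper chosen for illustration.

```python
import math


def forward_and_derivative(x):
    """Evaluate y = exp(sin(x)) and dy/dx by multiplying local derivatives."""
    u = math.sin(x)          # intermediate value u = sin(x)
    y = math.exp(u)          # output y = exp(u)
    du_dx = math.cos(x)      # effect of x on u
    dy_du = math.exp(u)      # effect of u on y
    return y, dy_du * du_dx  # chain rule: multiply along the single path


x = 0.5
y, dy_dx = forward_and_derivative(x)

# Compare against a central finite difference as an independent check.
h = 1e-6
numeric = (math.exp(math.sin(x + h)) - math.exp(math.sin(x - h))) / (2 * h)
print(dy_dx, numeric)
```

Each local derivative corresponds to one arrow in the diagram, and the product of the two is the influence of \(x\) on \(y\).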

As a final example, consider the function \(f: x \mapsto \sqrt{\tan(x^2)}\). The corresponding diagram for this function is:

![]()

Here we defined \(u:=x^2\), \(v:=\tan(u)\), and \(y:=\sqrt{v}\). The effect of \(x\) on \(f\) is given by the effect of \(x\) on \(u\) times the effect of \(u\) on \(v\) times the effect of \(v\) on \(y\), i.e. \[\frac{\dd f}{\dd x}=\frac{\dd y}{\dd v}\cdot \frac{\dd v}{\dd u}\cdot \frac{\dd u}{\dd x}\text.\] Using basic derivative techniques, we therefore conclude that \[\frac{\dd f}{\dd x}=\frac{1}{2\sqrt{v}}\cdot \frac{1}{\cos^2(u)}\cdot 2x\text.\] Using our substitutions, we can write this as \[\frac{\dd f}{\dd x}=\frac{1}{2\sqrt{\tan(x^2)}}\cdot \frac{1}{\cos^2(x^2)}\cdot 2x=\frac{x}{\cos^2(x^2)\sqrt{\tan(x^2)}}\text.\]
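To see that the product of the three local derivatives really matches the simplified closed form, both can be evaluated at the same point. This is a small sketch under the assumption that \(x\) is chosen so that \(\tan(x^2) > 0\); the function names `chain_derivative` and `closed_form` are illustrative.

```python
import math


def chain_derivative(x):
    """dy/dx for y = sqrt(tan(x^2)), built from three local derivatives."""
    u = x ** 2                         # u = x^2
    v = math.tan(u)                    # v = tan(u)
    du_dx = 2 * x                      # effect of x on u
    dv_du = 1 / math.cos(u) ** 2       # effect of u on v
    dy_dv = 1 / (2 * math.sqrt(v))     # effect of v on y
    return dy_dv * dv_du * du_dx       # multiply along the single path


def closed_form(x):
    """The simplified expression x / (cos^2(x^2) * sqrt(tan(x^2)))."""
    return x / (math.cos(x ** 2) ** 2 * math.sqrt(math.tan(x ** 2)))


x = 0.8   # chosen so that tan(x^2) > 0 and the square root is defined
print(chain_derivative(x), closed_form(x))
```

Both expressions should print the same value up to floating-point rounding.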

As you may remember, when a derivative is equal to zero, i.e. when changing the input \(x\) a bit has no first-order effect on \(y\), we are at a stationary point of the function: typically a minimum or a maximum, although it can also be an inflection point (as for \(x^3\) at \(x = 0\)). This is why computing the derivative is so useful: it allows us to find candidate minima and maxima of functions, and if the function describes our model’s performance on a dataset given some parameters, the derivative can guide us toward parameters that maximize our model’s performance.
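One common way to let the derivative guide the search is to repeatedly nudge a parameter in the direction in which performance increases. The sketch below is purely illustrative: the `performance` curve, its maximum at \(w = 2\), the starting point, and the step size are all made-up assumptions, not a model from this page.

```python
def performance(w):
    """Hypothetical performance curve with a single maximum at w = 2."""
    return 3.0 - (w - 2.0) ** 2


def d_performance(w):
    """Derivative of the hypothetical performance curve."""
    return -2.0 * (w - 2.0)


w = 0.0              # arbitrary starting parameter
step_size = 0.1      # illustrative choice
for _ in range(200):
    # Move in the direction in which the derivative says performance increases.
    w += step_size * d_performance(w)

print(w, d_performance(w))
```

The loop settles where the derivative is (numerically) zero, which for this particular curve is its maximum.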

Summary In this theory page, you have seen: a graphical visualization of functions and derivatives, the chain rule of differentiation, and the sum rule of differentiation in the context of standard functions \(f:\mathbb{R}\to \mathbb{R}\).