Calculus: What are derivatives and why should we care?

Multivariate Derivatives

In general, we might be interested in functions with more than one input and more than one output. For instance, given a single input, e.g. an MRI scan, we might want to predict several outputs, each corresponding to a different kind of observation we could make on the scan. Similarly, we might want to predict the average time someone will spend in the coffee shop we opened based on their age, type of job, hobbies, and so on. In these cases, we would still like to compute derivatives of functions to estimate how well our model does, and we now have a derivative of each output with respect to each input.

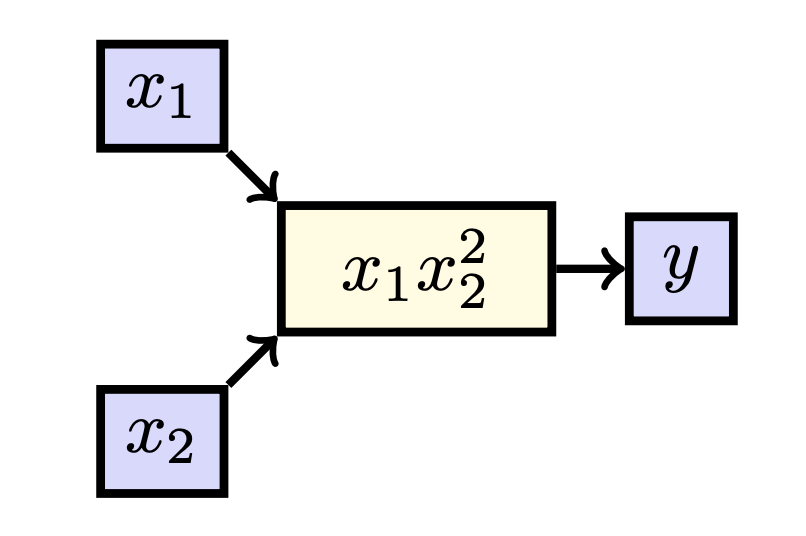

Let’s go one step further, and consider a function \(f:\mathbb{R}^2\to\mathbb{R}\) such that \(f:\cv{x_1\\ x_2}\mapsto x_1x_2^2\). We can again draw this function:

In this case, we can consider two derivatives: the effect of \(x_1\) on \(y\) and the effect of \(x_2\) on \(y\). When there are multiple derivatives for different variables, we do not write \(\dfrac{\dd y}{\dd x_1}\) but \(\dfrac{\partial y}{\partial x_1}\), to avoid confusion. We call such a derivative a partial derivative. In terms of our earlier metaphor, the derivative of a standard real function is the effect of turning the knob of a machine with one knob, whereas a partial derivative is the effect of turning one of several knobs while keeping the others still. That is, \(\dfrac{\partial y}{\partial x_1}\) tells us how much \(y\) changes when \(x_1\) is increased while \(x_2\) is kept constant. We can therefore treat \(x_2\) as a constant when taking the derivative with respect to \(x_1\), which effectively reduces the setup above to the univariate case.

In this case, we have that there is only one path from \(x_1\) to \(y\), and only one path from \(x_2\) to \(y\), giving us: \[\begin{aligned}\dfrac{\partial y}{\partial x_1}&=\dfrac{\partial x_1x_2^2}{\partial x_1}\\[0.25cm] &= x_2^2,\end{aligned}\] and \[\begin{aligned}\dfrac{\partial y}{\partial x_2}&=\dfrac{\partial x_1x_2^2}{\partial x_2}\\[0.25cm] &= 2x_1x_2.\end{aligned}\]
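As a quick sanity check, the two partial derivatives above can be compared with a numerical estimate. The sketch below is only an illustration: the helper `partial`, the step size `h`, and the evaluation point are assumptions chosen for this example, and the central difference stands in for the exact derivative.

```python
def f(x1, x2):
    """The example function y = x1 * x2**2."""
    return x1 * x2**2


def partial(func, args, index, h=1e-6):
    """Central-difference estimate of the partial derivative of func
    with respect to args[index], keeping all other inputs fixed."""
    up = list(args)
    down = list(args)
    up[index] += h
    down[index] -= h
    return (func(*up) - func(*down)) / (2 * h)


# Hypothetical evaluation point, chosen only for illustration.
x1, x2 = 3.0, 2.0

dy_dx1 = partial(f, (x1, x2), 0)  # analytic result: x2**2
dy_dx2 = partial(f, (x1, x2), 1)  # analytic result: 2 * x1 * x2

print("dy/dx1:", dy_dx1, "analytic:", x2**2)
print("dy/dx2:", dy_dx2, "analytic:", 2 * x1 * x2)
```

Only one entry of `args` is perturbed per call, which mirrors the idea of turning one knob while keeping the other still.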

Gradient and Jacobian matrix

We still consider a function \(f:\mathbb{R}^2\to\mathbb{R}\) such that \(f:\cv{x_1\\ x_2}\mapsto x_1x_2^2\). We sometimes write the 'full' derivative \(\frac{\dd f}{\dd \boldsymbol{x}}\) as the following vector \[\begin{aligned}\frac{\dd f}{\dd \boldsymbol{x}} &=\matrix{\dfrac{\partial f}{\partial x_1} \\ \dfrac{\partial f}{\partial x_2}}\\[0.25cm] &= \matrix{x_2^2 \\2x_1x_2},\end{aligned}\] where we understand \(\boldsymbol{x}:= \cv{x_1 \\ x_2}\).

In the general case of functions \(f:\mathbb{R}^n\to\mathbb{R}\), we call this full derivative, which is a function from \(\mathbb{R}^n\) to \(\mathbb{R}^n\), a gradient and denote it as \(\dfrac{\dd f}{\dd \boldsymbol{x}}=\nabla f(\boldsymbol{x})=\mathrm{grad}\, f(\boldsymbol{x})\). Taking \(y=f(\boldsymbol{x})\), we also write \(\dfrac{\dd y}{\dd \boldsymbol{x}}=\nabla y(\boldsymbol{x})=\mathrm{grad}\, y(\boldsymbol{x})\).

In the still more general case of functions \(\boldsymbol{f}:\mathbb{R}^n\to\mathbb{R}^m\), we collect all partial derivatives in a matrix called the Jacobian matrix, denoted as \(\dfrac{\partial \boldsymbol{f}}{\partial \boldsymbol{x}}=\boldsymbol{J}_\boldsymbol{f}(\boldsymbol{x})\) and as \(\dfrac{\dd \boldsymbol{f}}{\dd \boldsymbol{x}}=\boldsymbol{J}_\boldsymbol{f}(\boldsymbol{x})\); we prefer the first notation. It is also called the derivative of the transformation \(\boldsymbol{f}\). The Jacobian matrix is the matrix whose row \(i\) contains all the partial derivatives of \(f_i\) with respect to the \(x_j\), i.e. \(\boldsymbol{J}_{ij}= \dfrac{\partial f_i}{\partial x_j}\). Hence, if we have one row (\(m=1\)), the Jacobian matrix is a row vector equal to the transpose of the gradient, i.e., the Jacobian matrix is the gradient written as a row vector.

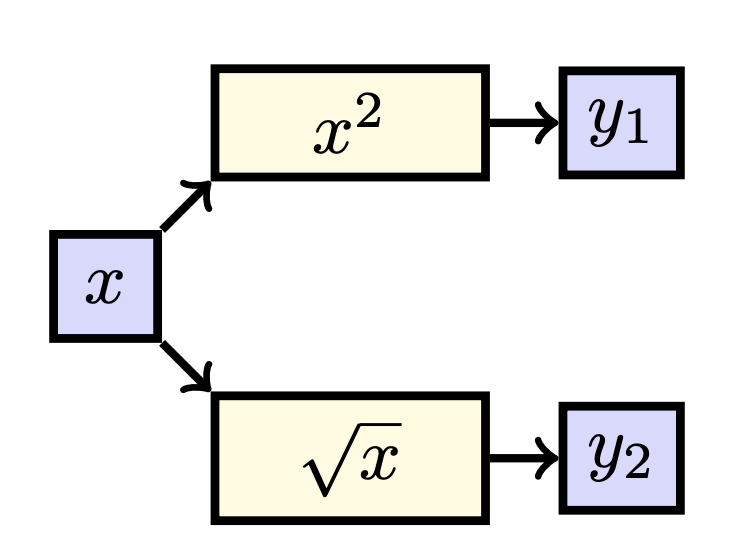

We can also have a function \(\boldsymbol{f}:\mathbb{R}\to\mathbb{R}^2\) which maps \(\boldsymbol{f}: x\mapsto\cv{x^2\\ \sqrt{x}}\). In this case, we have that \(\boldsymbol{y}=\boldsymbol{f}(x)\) where \(\boldsymbol{y}\) is a vector (and hence is written in bold font), and thus we can consider \(y_1=x^2\) and \(y_2=\sqrt{x}\). Drawing this, we find:

When again looking at the paths, we see that \[\begin{aligned}\frac{\dd y_1}{\dd x} &= \frac{\dd x^2}{\dd x}\\[0.25cm] &= 2x,\end{aligned}\] and \[\begin{aligned}\frac{\dd y_2}{\dd x} &= \frac{\dd \sqrt{x}}{\dd x}\\[0.25cm] &= \dfrac{1}{2\sqrt{x}}.\end{aligned}\] Here we can also group the different derivatives into one matrix: \[\frac{\dd \boldsymbol{y}}{\dd x} = \cv{2x \\ \dfrac{1}{2\sqrt{x}}}\] Please note that if we have a function \(\boldsymbol{f}:\mathbb{R}^n\to\mathbb{R}^m\), our Jacobian matrix will have shape \(m\times n\).
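The \(m\times n\) shape of the Jacobian can be made concrete with a small numerical sketch. The function `jacobian`, the names `curve` and `product`, and the evaluation points below are assumptions made for this illustration; the entries are central-difference estimates, not exact derivatives.

```python
import math


def jacobian(func, x, h=1e-6):
    """Central-difference Jacobian of func: R^n -> R^m.

    func takes a list of n floats and returns a list of m floats.
    The result is a list of m rows with n entries each, where
    entry [i][j] approximates d f_i / d x_j.
    """
    n = len(x)
    columns = []
    for j in range(n):
        up = list(x)
        down = list(x)
        up[j] += h
        down[j] -= h
        f_up = func(up)
        f_down = func(down)
        columns.append([(a - b) / (2 * h) for a, b in zip(f_up, f_down)])
    m = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def curve(x):
    """f: R -> R^2 with f(x) = (x^2, sqrt(x))."""
    return [x[0] ** 2, math.sqrt(x[0])]


def product(x):
    """f: R^2 -> R with f(x1, x2) = x1 * x2^2."""
    return [x[0] * x[1] ** 2]


J_curve = jacobian(curve, [4.0])           # 2 rows, 1 column
J_product = jacobian(product, [3.0, 2.0])  # 1 row, 2 columns

print(len(J_curve), "x", len(J_curve[0]), J_curve)
print(len(J_product), "x", len(J_product[0]), J_product)
```

For `product`, the single row of the Jacobian is the gradient \(\cv{x_2^2 \\ 2x_1x_2}\) written as a row vector.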

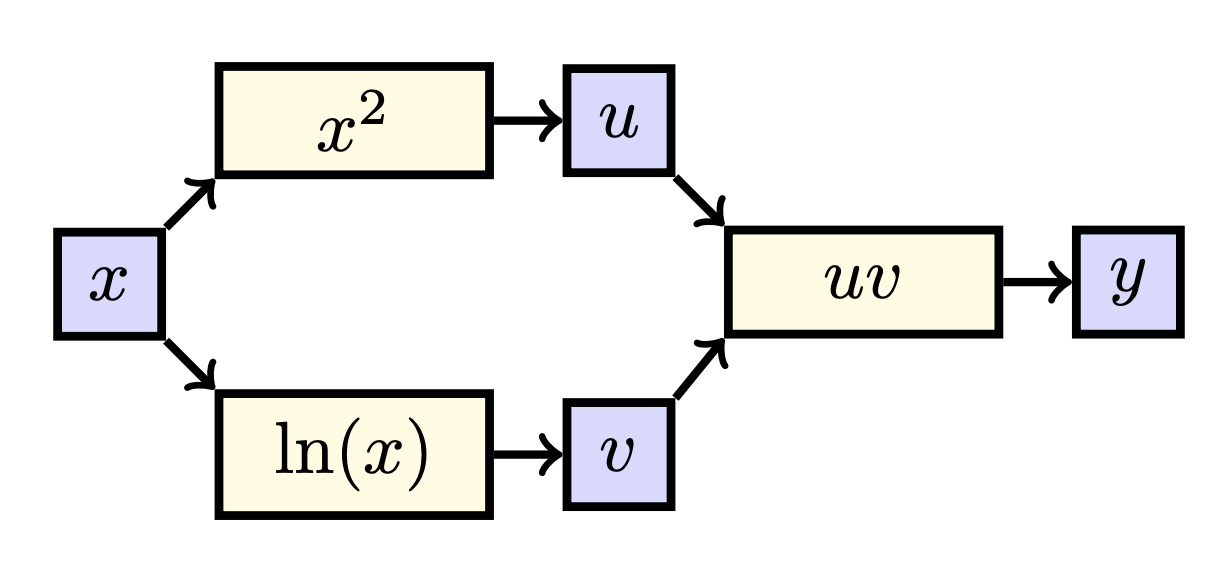

Now we are finally ready to consider a function with multiple streams of influence. Consider the function \(f(x)=x^2\ln(x)\). When breaking this function into smaller parts, we can write it as \(y=g\bigl(\boldsymbol{h}(x)\bigr)\), where \(\boldsymbol{h}(x)=\cv{x^2\\ \ln(x)}\) and \(g(u,v)=u\cdot v\). That is, \(y\) is found by first calculating intermediate values \(u=x^2\) and \(v=\ln(x)\) and then finding \(y=u\cdot v\). When we draw these functions, we see the following:

It is now very clear that the effect of \(x\) on \(y\) is twofold: both through \(u\) and \(v\). As mentioned earlier, we need to consider all streams of influence. Specifically, we sum the different paths/effects, i.e.: \[\frac{\dd y}{\dd x}=\frac{\partial y}{\partial u}\cdot \frac{\dd u}{\dd x}+\frac{\partial y}{\partial v}\cdot \frac{\dd v}{\dd x}\text. \] Plugging everything in, we find\[\begin{aligned}\frac{\dd y}{\dd x} &=\frac{\partial y}{\partial u}\cdot \frac{\dd u}{\dd x}+\frac{\partial y}{\partial v}\cdot \frac{\dd v}{\dd x}\\[0.25cm] &= v\cdot 2x+ u\cdot \dfrac{1}{x} \\[0.25cm] &= \ln(x)\cdot 2x+x^2\cdot \dfrac{1}{x}\\[0.25cm] &=2x\left(\ln(x)+\dfrac{1}{2}\right)\text.\end{aligned}\]
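The sum over paths can be checked numerically. The sketch below is an illustration under assumptions: the function names, the step size `h`, and the evaluation point `x = 2.0` are chosen for this example only.

```python
import math


def dy_dx_paths(x):
    """Sum the contributions of the two paths x -> u -> y and x -> v -> y."""
    u = x ** 2
    v = math.log(x)
    dy_du, dy_dv = v, u          # partial derivatives of y = u * v
    du_dx, dv_dx = 2 * x, 1 / x  # derivatives of u = x^2 and v = ln(x)
    return dy_du * du_dx + dy_dv * dv_dx


def dy_dx_closed(x):
    """The simplified result 2x (ln(x) + 1/2)."""
    return 2 * x * (math.log(x) + 0.5)


def dy_dx_numeric(x, h=1e-6):
    """Central-difference estimate of the derivative of x^2 ln(x)."""
    def f(t):
        return t ** 2 * math.log(t)
    return (f(x + h) - f(x - h)) / (2 * h)


x = 2.0  # hypothetical evaluation point; any x > 0 works
print(dy_dx_paths(x), dy_dx_closed(x), dy_dx_numeric(x))
```

All three values should agree up to the accuracy of the finite-difference estimate.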

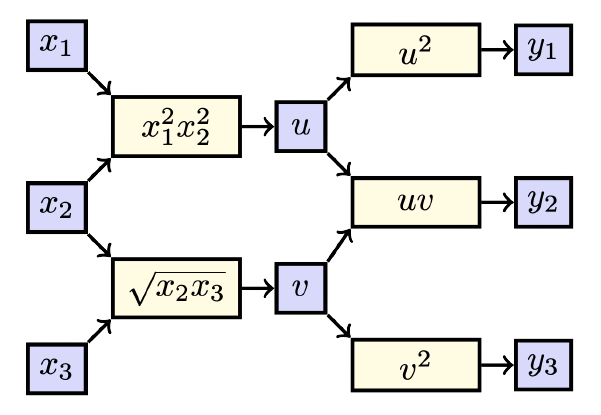

When visualizing this function, we get the following:

When counting the paths from \(x_2\) to \(y_2\), we find two paths: one through \(u\) and one through \(v\). We hence find \[\dfrac{\partial y_2}{\partial x_2}=\dfrac{\partial y_2}{\partial u}\cdot \dfrac{\partial u}{\partial x_2}+\dfrac{\partial y_2}{\partial v}\cdot \dfrac{\partial v}{\partial x_2}\text.\] Plugging in our derivatives, with \(u=x_1^2x_2^2\), \(v=\sqrt{x_2x_3}\) and \(y_2=u\cdot v\), we find \[\begin{aligned}\dfrac{\partial y_2}{\partial x_2}&=v\cdot 2x_1^2x_2+ u\cdot \dfrac{x_3}{2\sqrt{x_2x_3}}\\[0.25cm] &= 2x_1^2x_2\sqrt{x_2x_3}+\dfrac{x_1^2x_2^2x_3}{2\sqrt{x_2x_3}}\text.\end{aligned}\]
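The same two-path sum can be checked numerically. This sketch rests on an assumption: the intermediate values are taken to be \(u=x_1^2x_2^2\) and \(v=\sqrt{x_2x_3}\) with \(y_2=u\cdot v\), as inferred from the derivatives used above. The function names, step size, and evaluation point are likewise chosen only for illustration.

```python
import math


def y2(x1, x2, x3):
    """Assumed form of y_2: u * v with u = x1^2 x2^2 and v = sqrt(x2 x3)."""
    u = x1 ** 2 * x2 ** 2
    v = math.sqrt(x2 * x3)
    return u * v


def dy2_dx2_paths(x1, x2, x3):
    """Sum of the path through u and the path through v."""
    u = x1 ** 2 * x2 ** 2
    v = math.sqrt(x2 * x3)
    du_dx2 = 2 * x1 ** 2 * x2
    dv_dx2 = x3 / (2 * math.sqrt(x2 * x3))
    return v * du_dx2 + u * dv_dx2


def dy2_dx2_numeric(x1, x2, x3, h=1e-6):
    """Central-difference estimate, varying x2 only."""
    return (y2(x1, x2 + h, x3) - y2(x1, x2 - h, x3)) / (2 * h)


point = (1.5, 2.0, 8.0)  # hypothetical inputs with x2 * x3 > 0
print(dy2_dx2_paths(*point), dy2_dx2_numeric(*point))
```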

Sweet! We now know how to find derivatives of multivariate functions. As you have seen, this approach is quite time-intensive, and sometimes (especially in deep learning) it is not necessary to write out everything by hand like this. This will be the topic of the rest of this chapter.

Summary

In this theory page we extended our graphical interpretation of derivatives to functions \(f:\mathbb{R}^n\to \mathbb{R}^m\).