Linear Algebra

Dot product

Definition A plain vector space comes with only two operations: adding vectors and multiplying a vector by a scalar. It has no operation that combines two vectors into a number. However, a vector space can be equipped with an inner product to form an inner product space. The most common inner product on \(\mathbb{R}^n\) is the dot product, defined for vectors \(\mathbf{v}=(a_1,\ldots,a_n)^{\top}\) and \(\mathbf{w}=(b_1,\ldots,b_n)^{\top}\) as: \[\begin{aligned}\mathbf{v}\boldsymbol{\cdot}\mathbf{w}&=a_1b_1+\cdots+a_nb_n\\[0.25cm] &= \sum_{i=1}^{n}a_ib_i\end{aligned}\] Note that the result is a scalar, not a vector.
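A minimal sketch of the definition in plain Python, assuming vectors are represented as lists (or tuples) of numbers of equal length. The function name `dot` is our own choice for illustration, not a library API.

```python
from typing import Sequence


def dot(v: Sequence[float], w: Sequence[float]) -> float:
    """Dot product: the sum of the products of corresponding components."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same dimension")
    return sum(a_i * b_i for a_i, b_i in zip(v, w))


# (1, 2, 3) . (4, 5, 6) = 1*4 + 2*5 + 3*6 = 32
print(dot([1, 2, 3], [4, 5, 6]))  # 32
```

The function returns a single number, matching the fact that the dot product of two vectors is a scalar. NumPy provides the same operation as `numpy.dot`.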

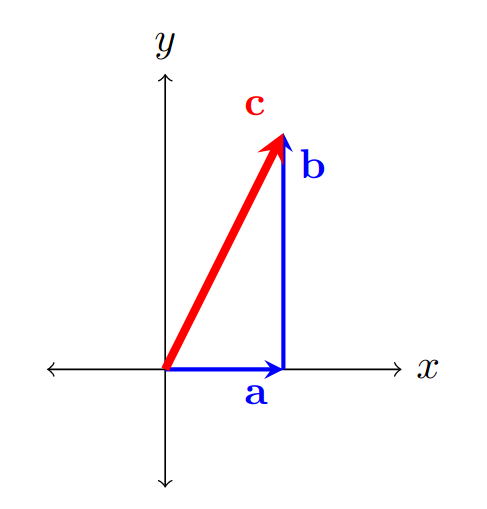

Norm of a vector To see why the dot product is useful and how to interpret it, let’s look at the dot product of a vector with itself: \[\begin{aligned} \mathbf{v}\boldsymbol{\cdot}\mathbf{v} &=\sum_{i=1}^{n}a_ia_i\\[0.25cm] &= \sum_{i=1}^{n}a_i^2\end{aligned}\] This sum is the squared norm (magnitude, or Euclidean length) of the vector \(\mathbf{v}\). The norm of a vector is usually written \(\lVert\cdot\rVert\), so we can write: \[\lVert\mathbf{v}\rVert=\sqrt{\sum_{i=1}^{n}a_i^2}=\sqrt{\mathbf{v}\boldsymbol{\cdot}\mathbf{v}}\] As a simple example, consider a 2D vector \(\mathbf{c}=\mathbf{a}+\mathbf{b}\), where \(\mathbf{a}=(a,0)^{\top}\) and \(\mathbf{b}=(0,b)^{\top}\), as illustrated in the figure.
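A short sketch that computes the norm exactly as the formula states, as the square root of \(\mathbf{v}\boldsymbol{\cdot}\mathbf{v}\). It assumes list-based vectors; `dot` and `norm` are our own helper names, not a library API.

```python
import math
from typing import Sequence


def dot(v: Sequence[float], w: Sequence[float]) -> float:
    """Sum of the products of corresponding components."""
    return sum(a * b for a, b in zip(v, w))


def norm(v: Sequence[float]) -> float:
    """Euclidean norm: the square root of v . v."""
    return math.sqrt(dot(v, v))


# (1, 2, 2) . (1, 2, 2) = 1 + 4 + 4 = 9, so the norm is 3
print(norm([1, 2, 2]))  # 3.0
```

The standard library computes the same quantity with `math.hypot(*v)` (passing more than two coordinates requires Python 3.8 or later).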

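Since \(\mathbf{c}=\mathbf{a}+\mathbf{b}=(a,b)^{\top}\), calculating the dot product of \(\mathbf{c}\) with itself gives: \[\lVert \mathbf{c}\rVert^2=\mathbf{c}\boldsymbol{\cdot}\mathbf{c}=a^2+b^2,\] which is exactly the Pythagorean theorem in 2D: \(\mathbf{a}\) and \(\mathbf{b}\) form the legs of a right triangle with lengths \(|a|\) and \(|b|\), and \(\mathbf{c}\) is its hypotenuse.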
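As a concrete instance (the numbers are chosen purely for illustration), take \(a=3\) and \(b=4\), so that \(\mathbf{c}=(3,4)^{\top}\):

\[\begin{aligned}\lVert\mathbf{c}\rVert^2&=\mathbf{c}\boldsymbol{\cdot}\mathbf{c}=3\cdot 3+4\cdot 4=25\\[0.25cm] \lVert\mathbf{c}\rVert&=\sqrt{25}=5\end{aligned}\]

This is the familiar 3-4-5 right triangle: legs of length 3 and 4 and a hypotenuse of length 5.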

Angle between two vectors Besides being useful for calculating norms, the dot product can also serve as a measure of similarity. For two non-zero \(n\)-dimensional vectors \(\mathbf{v}\) and \(\mathbf{w}\), the angle \(\theta\) between them satisfies: \[\cos\theta=\frac{\mathbf{v}\boldsymbol{\cdot}\mathbf{w}}{\lVert \mathbf{v}\rVert \cdot \lVert \mathbf{w}\rVert}\] The cosine always lies between \(-1\) and \(1\). When it equals \(1\), the vectors point in the same direction (as similar as possible); when it equals \(0\), they are perpendicular; and when it equals \(-1\), they point in opposite directions. This quantity, called the cosine similarity, is widely used in fields such as Natural Language Processing, for example to compare documents represented as word-count vectors. Such vectors have no negative components, so their cosine similarity lies between \(0\) and \(1\), and a value of \(0\) marks the least similar pair.
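A minimal sketch of the cosine formula in plain Python, assuming list-based vectors. The function name `cosine_similarity` is our own; it raises an error for a zero vector because the formula divides by both norms. The three calls cover the same-direction, perpendicular, and opposite-direction cases.

```python
import math
from typing import Sequence


def cosine_similarity(v: Sequence[float], w: Sequence[float]) -> float:
    """cos(theta) = (v . w) / (||v|| * ||w||); undefined for zero vectors."""
    dot_vw = sum(a * b for a, b in zip(v, w))
    norm_v = math.sqrt(sum(a * a for a in v))
    norm_w = math.sqrt(sum(b * b for b in w))
    if norm_v == 0 or norm_w == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot_vw / (norm_v * norm_w)


print(cosine_similarity([1, 2], [2, 4]))    # same direction: approximately 1.0
print(cosine_similarity([1, 0], [0, 1]))    # perpendicular: 0.0
print(cosine_similarity([1, 1], [-1, -1]))  # opposite directions: approximately -1.0
```

To recover \(\theta\) itself, pass the result to `math.acos`; clamping the value to \([-1, 1]\) first guards against floating-point rounding pushing it slightly outside that range.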

Summary We have introduced a new operation on vectors, the dot product. It can be used to calculate the norm (magnitude) of a vector, as well as the cosine similarity between two vectors. A cosine similarity of 1 means the vectors point in the same direction, 0 means they are perpendicular, and -1 means they point in opposite directions. Cosine similarity is widely used in machine learning.